Upcoming privacy policy changes on social media platforms and other digital services, which allow user interactions with AI tools to be used for personalising ads and curating content, create a significant new cybersecurity risk by centralising a highly sensitive class of personal data.

The latest such policy announcements, made this week, mean that conversations users have with popular AI assistants integrated into their daily apps – including questions, personal thoughts, and sensitive queries – will be logged and analysed to inform the ads and content they see.

While these policies generally state that they will exclude specific sensitive topics from ad targeting, the data must still be collected and processed in order to be categorised. Additionally, the "right to object" mentioned in many of the recently sent notifications is not a simple opt-out for all users. In regions with strict data privacy laws, such as the EU and UK, these policies will not be permitted to take effect. But users in other regions may have limited recourse beyond simply not using the AI features in question.

"These policy changes mark a strategic shift in data collection, moving from observable and public behaviours like 'likes' and 'shares' to inferred user intent and private conversations," says Doros Hadjizenonos, Regional Director, Southern Africa at Fortinet. "From a security perspective, this effectively creates a concentrated 'super-honeypot' of deeply personal, psychological data. The primary concern is what happens if this new database is breached by malicious actors."

Fortinet highlights two critical security implications for users:

- A new, high-value target for data breaches: Policies such as these centralise data that is far more sensitive than traditional online user activity. It includes personal queries about health, finances, career anxieties, and family matters. For cybercriminals, this database is an exceptionally valuable target. A breach would expose not just users' activity but also their private thought patterns, creating a treasure trove of exploitable information.

- Long-term risk from persistent data: Companies implementing such policies generally designate personal conversations as a persistent commercial asset for ad targeting. This raises significant questions about the data's lifecycle and a user's long-term risk exposure: once collected and retained, the data forms a permanent record of a user's private queries that remains vulnerable to future threats.

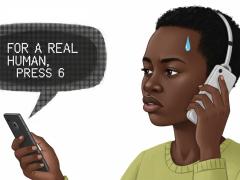

"We urge users to be highly mindful of the information they share with any integrated AI," Hadjizenonos adds. "The convenience of an AI assistant is now directly linked to the creation of a permanent, sensitive data profile. The most effective security measure is to treat these AI chats as public, not private, conversations."